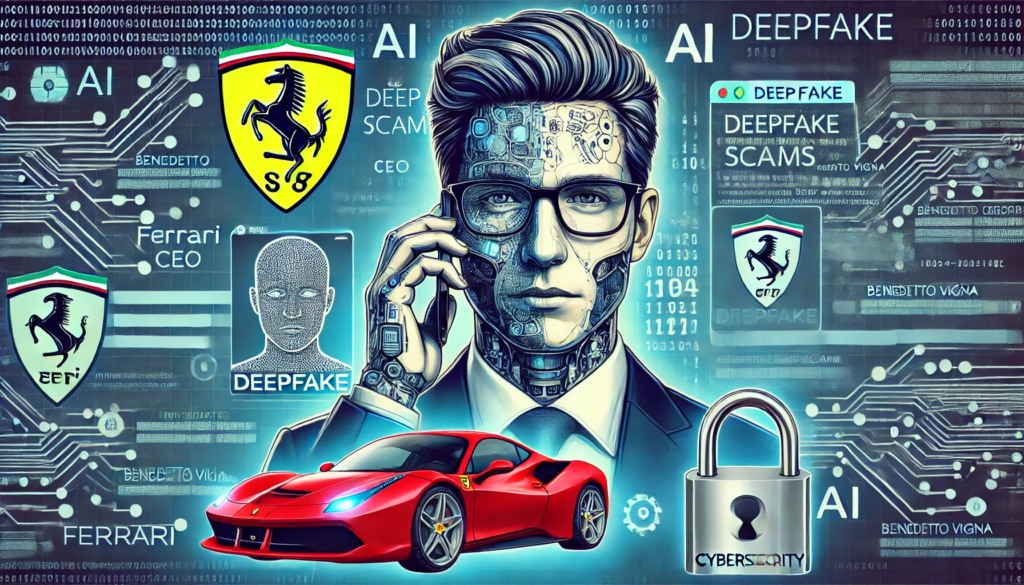

Ferrari CEO Deepfake Scam Highlights Growing Threat of AI-Based Fraud

A sophisticated deepfake scam targets Ferrari, highlighting growing AI threats.

- What are the potential risks and implications of deepfake scams for high-profile individuals and corporations?

- How can companies and executives protect themselves from sophisticated AI-driven scams?

- What future scenarios could unfold if AI-generated deepfakes become increasingly convincing and widespread?

In a world increasingly reliant on digital communication, the threat of AI-generated deepfake scams is becoming alarmingly real. Recently, Ferrari NV, the renowned Italian supercar manufacturer, narrowly avoided a potentially devastating scam involving a deepfake impersonation of its CEO, Benedetto Vigna. This incident, reported by Bloomberg and widely covered by other outlets, underscores the escalating sophistication of AI-driven fraud and its potential repercussions on society.

The Incident Unfolds

It was an ordinary Tuesday morning when a Ferrari executive started receiving unusual WhatsApp messages purportedly from CEO Benedetto Vigna. The messages, which mentioned a significant acquisition and asked for the utmost discretion, raised immediate suspicions because they came from an unfamiliar phone number with an unfamiliar profile picture. A phone call followed, in which the caller imitated Vigna's voice. Despite the convincing impersonation, the executive stayed alert and asked a probing question about a book Vigna had recently recommended. The caller abruptly ended the call, and Ferrari opened an internal investigation.

The Mechanics of the Scam

The scam leveraged advanced deepfake technology to simulate Vigna’s voice and mannerisms convincingly. The fraudster explained away the different mobile number as a precaution for discussing a confidential deal, a pretext that lent the approach plausibility and could have easily deceived someone less vigilant. This incident mirrors a similar attempt reported in May involving WPP Plc CEO Mark Read, indicating a worrying trend in corporate-targeted deepfake scams.

Implications of Deepfake Technology

Deepfake technology, which uses AI to create realistic images, videos, and voice recordings, poses a significant threat to businesses and individuals alike. While these tools have not yet caused the widespread deception many fear, incidents like the Ferrari scam demonstrate their potential. In February, the South China Morning Post reported that a multinational company in Hong Kong lost HK$200 million ($26 million) to a deepfake scam involving fabricated representations of its CFO and other executives during a video call.

The Growing Threat Landscape

According to Rachel Tobac, CEO of cybersecurity training company SocialProof Security, there has been a notable increase in criminals attempting to use AI for voice cloning. Cybersecurity experts warn that it’s only a matter of time before these tools become incredibly accurate and more difficult to detect. Stefano Zanero, a professor of cybersecurity at Italy’s Politecnico di Milano, emphasized that the sophistication of AI-based deepfake tools is expected to improve rapidly, making them a formidable challenge for security professionals.

The Role of Corporate Vigilance

In response to these threats, companies like CyberArk are proactively training their executives to spot deepfake scams. This includes educating employees on the latest tactics used by fraudsters and implementing stringent verification processes. The Ferrari incident highlights the importance of such measures, demonstrating that even the most convincing scams can be thwarted by informed and cautious personnel.

Societal Repercussions

The rise of deepfake technology has far-reaching implications beyond the corporate world. As these tools become more accessible and sophisticated, they could be used for political manipulation, personal vendettas, and other malicious activities. The potential for deepfakes to undermine trust in digital communications and erode public confidence in authentic media is profound.

Ethical and Legal Considerations

The ethical implications of deepfake technology are complex. While the technology itself is neutral, its misuse poses significant ethical dilemmas. There is an urgent need for robust legal frameworks to address the creation and distribution of malicious deepfakes. Governments and regulatory bodies must collaborate to establish clear guidelines and penalties to deter the misuse of this technology.

Future Scenarios

Looking ahead, several scenarios could unfold as deepfake technology continues to evolve. On one hand, we may see a proliferation of deepfake scams and malicious uses, leading to a societal crisis of trust in digital communications. On the other hand, advancements in AI detection tools and increased public awareness could mitigate these risks. Companies and individuals will need to adopt a proactive stance, integrating advanced security measures and fostering a culture of vigilance.

The Path Forward

To navigate the challenges posed by deepfake technology, a multifaceted approach is required. This includes:

- Education and Training: Regular training sessions for employees to recognize and respond to deepfake scams.

- Advanced Detection Tools: Investment in AI-powered tools to detect and flag potential deepfakes.

- Robust Verification Processes: Implementation of stringent verification protocols for all digital communications.

- Legal and Ethical Frameworks: Development of comprehensive legal frameworks to regulate the creation and use of deepfake technology.
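As an illustration of what a verification protocol might look like in practice, here is a minimal Python sketch. It is not drawn from Ferrari's or any other company's actual procedures. The directory `KNOWN_NUMBERS`, the keyword list `HIGH_RISK_TERMS`, the `Request` type, and the `required_checks` function are all hypothetical names chosen for this example. The idea is simply that a request from an unrecognized number, or one touching on a sensitive topic, should trigger extra checks before anyone acts on it, much as the Ferrari executive did with the question about the book.

```python
from dataclasses import dataclass

# Hypothetical directory of verified contact numbers. In practice this would
# come from an internal system of record, never from the incoming message.
KNOWN_NUMBERS = {"ceo": "+39 000 000 0001"}

# Illustrative phrases that mark a request as high risk (an assumption).
HIGH_RISK_TERMS = ("acquisition", "wire transfer", "confidential")


@dataclass
class Request:
    claimed_sender: str  # role the sender claims, e.g. "ceo"
    from_number: str     # number the message or call actually came from
    text: str            # content of the request


def required_checks(req: Request) -> list[str]:
    """Return the verification steps to complete before acting on a request."""
    checks = []
    known = KNOWN_NUMBERS.get(req.claimed_sender)
    # An unknown sender or a mismatched number calls for an out-of-band call-back.
    if known is None or req.from_number != known:
        checks.append("call back on the number in the company directory")
    # Sensitive topics call for a personal challenge and a second approver.
    if any(term in req.text.lower() for term in HIGH_RISK_TERMS):
        checks.append("ask a question only the real sender could answer")
        checks.append("get approval from a second authorized person")
    return checks


if __name__ == "__main__":
    suspicious = Request(
        "ceo", "+39 000 000 0999", "Be ready for a confidential acquisition."
    )
    for step in required_checks(suspicious):
        print(step)
```

A rule-based check like this does not detect deepfakes. It only ensures that a convincing voice alone is never enough to authorize a sensitive action.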

Reflecting on the Ferrari Incident

The Ferrari deepfake scam serves as a stark reminder of the vulnerabilities inherent in our increasingly digital world. While the executive’s vigilance prevented a potentially disastrous outcome, the incident underscores the need for heightened awareness and proactive measures across all sectors. As deepfake technology continues to evolve, so too must our strategies for combating its misuse.

Navigating the Digital Age

In conclusion, the Ferrari deepfake scam is a harbinger of the challenges and complexities that lie ahead in the digital age. As AI and deepfake technologies advance, the onus is on businesses, governments, and individuals to stay informed and prepared. By fostering a culture of vigilance and investing in advanced security measures, we can safeguard against the threats posed by these powerful tools and navigate the digital landscape with confidence and integrity.